I'm James Harrison, and this is what I do.

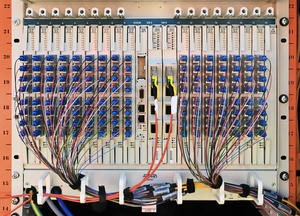

Fibre Optics & Telecoms

I enjoy connecting people, and telecoms is how I do it. In rural areas in particular, like the village I live in, good connectivity is vital for work and life in the modern world.

Networks I've architected and specified now cover roughly 250,000 rural and hard-to-reach homes in the UK with gigabit full-fibre internet connections, using both point-to-point Ethernet and the latest passive optical network (PON) technologies.

Astrophotography

I love astrophotography, and operate a robotic imaging system in my back garden using software and tools I've built myself. Photos I've taken have appeared in the Sky at Night magazine.

Mostly I image galaxies and other small-scale objects using a 200mm reflecting telescope, but I also do mosaic projects for wide-field imaging.

I also enjoy photography, focusing on landscapes and nature.

Software and Systems

I'm an enthusiastic coder, writing Rust, Python, and Go, as well as frontend code with TypeScript, Vue, and friends. I design and build software professionally, such as fibre optic test automation tools, and I also maintain open source projects.

I also do systems design and implementation: everything from data and enterprise architecture, to cloud infrastructure design and deployment automation, to customer- and business-critical infrastructure for internet service providers.

This site has evolved a few times. I’ve had a blog of some sort here since 2008. This spot on the internet, named from a love of music and cryptography, is mine, and these days it serves more as a personal hub for the projects I’ve worked on and my hobbies.